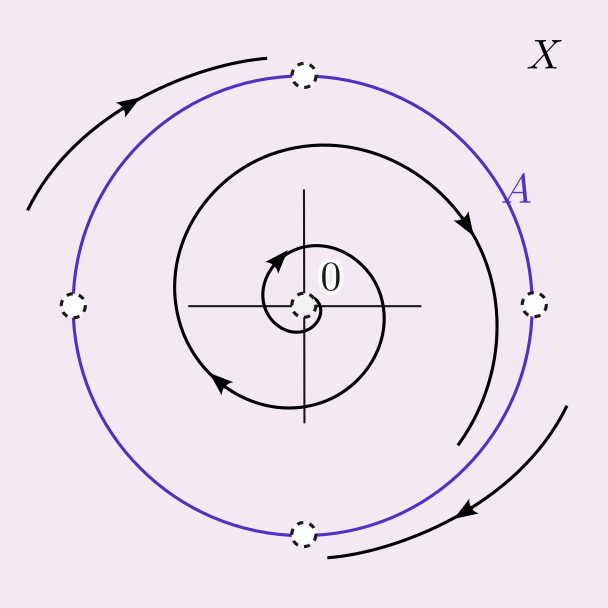

A search for structure.

Hi, my name is Wout (01) and I am a postdoctoral researcher at KTH Stockholm and Digital Futures (02). I am broadly interested in the mathematical side of systems & control theory (03), in particular, I am fascinated by the synergy with topology and questions that arise in the context of synthetic biology. Feel free to reach out.

More information