Almost Surely Investigations in Optimal Control and Dynamical Systems,

Hi, my name is Wout and I am a researcher broadly interested in the mathematical side of systems & control theory.

This page functions as a (web)log of results I find interesting.

Feel free to reach me in case of any hints, tips, tricks, mistakes, ideas or any other comment.

Last summer, I received my PhD in Electrical Engineering from EPFL, working with Daniel Kuhn, as part of the NCCR Automation (a consortium between ETH Zürich, EPFL, EMPA and more). Before moving to Lausanne, I received an MSc in Systems & Control from TU Delft, working with Peyman Mohajerin Esfahani.

During the spring of 2024, I visited KTH Stockholm, the Division of Decision and Control Systems, hosted by Mikael Johansson.

In August 2024, I joined the Applied Mathematics department (INMA) at UCLouvain, as part of the larger Information and Communication Technologies, Electronics and Applied Mathematics (ICTEAM) institute, working with Raphael Jungers as a postdoctoral fellow.

‘‘He had one big idea and … that was the idea of relating many kinds of mathematical and scientific problems to the study of vector fields on manifolds and the properties of the related flows. That was his central theme and central mechanism.’’ p. 494 Browder on Smale (1993)


Communities |July 02, 2024|

tags: math.OC

As the thesis is printed, it is time to reflect.

Roughly 1 year ago I got the wonderful advice from Roy Smith that whatever you do, you should be part of a community. This stayed with me, as four years ago I wrote on my NCCR Automation profile page that “Control problems of the future cannot be solved alone, you need to work together.” Only now, admittedly in retrospect, do I realize what this really means: I was being kindly welcomed to several communities.

Just last week at the European Control Conference (ECC24), Angela Fontan and Matin Jafarian organized a lunch session on peer review in our community and, as Antonella Ferrara emphasized throughout, you engage in the process to contribute to the community, your community. As such, a high-quality (and sustainable) conference is intimately connected to the feeling of community, especially since the conference itself feeds back into our feeling of community (as I am sure you know, everything is just feedback loops...).

ECC24 was a fantastic example when it comes to strengthening that feeling of community and other examples that come to mind are the inControl podcast by Alberto Padoan, Autonomy Talks by Gioele Zardini, the IEEE CSS magazine, the IEEE CSS road map 2030 and recently, the KTH reading group on Control Theory: Twenty-Five Seminal Papers (1932-1981) organized by Miguel Aguiar. The historical components are important not only to better understand our community (the why, how and what), but to always remember we build upon our predecessors, we are part of the same community. Of course, the NCCR Automation contributed enormously and far beyond Switzerland (fellowships, symposia, workshops, …), but even closer to home, ever since the previous century, the Dutch Institute of Systems and Control (DISC) has done a remarkable job bringing and keeping all researchers and students together (MSc programs, a national graduate school, Benelux meetings, …).

The importance of community is sometimes linked to being in need of recognition and to working more effectively when in competition. I am sure this is true for some and I am even more sure this is reinforced in fields where research is expensive and funding is scarce. Yet, the vast majority of members of the scientific community are not PIs, but students, and for us the community is critically important to create and reinforce a sense of belonging. The importance of belonging is widely known (not only in the scientific community) and most of the problems we have here on earth can be directly linked to a lack of belonging and community (as beautifully formulated in Pale Blue Dot by Carl Sagan).

Celebrating personal successes is of great importance; we need our heroes. But I want to be part of a scientific community where we focus on progress of the community: what did we solve, what are our open problems, and how do we go about solving them? Looking back, I am happy to see that I am indeed part of such a community. Thank you!

Weekend read |May 14, 2023|

tags: math.OC

The ‘‘Control for Societal-scale Challenges: Road Map 2030’’ by the IEEE Control Systems Society (Eds. A. M. Annaswamy, K. H. Johansson, and G. J. Pappas) was published a week ago. This roadmap is a remarkable piece of work, over 250 pages, an outstanding list of authors and coverage of almost anything you can imagine, and more.

If you somehow found your way to this website, then I can only strongly recommend reading this document. Despite many of us being grounded in traditional engineering disciplines, I do agree with the sentiment of this roadmap that the most exciting (future) work is interdisciplinary; this is substantiated by many examples from biology. Better yet, it is stressed that besides familiarizing yourself with the foundations, it is of quintessential importance (and fun, I would say) to properly dive into the field where you hope to apply these tools.

‘‘Just because you can formulate and solve an optimization problem does not mean that you have the correct or best cost function.’’ p. 32

Section 4.A on Learning and Data-Driven Control also contains many nice pointers, sometimes alluding to a slight disconnect between practice and theory.

‘‘Sample complexity may only be a coarse measure in this regard, since it is not just the number of samples but the “quality” of the samples that matters.’’ p. 142

The section on safety is also inspiring: stability alone will not be enough anymore. However, the most exciting part for me was Chapter 6 on education. The simple goal of making students excited early on is just great. Also, the aspiration to design the best possible material as a community is more than admirable.

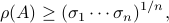

Standing on the shoulders of giants |April 3, 2023|

tags: math.OC

One of the most illuminating activities one can undertake is to go back in time and see how the giants in (y)our field shaped the present. Sadly, several of our giants passed away recently and I want to highlight one of them in particular: Roger W. Brockett.

It is very rare to find elegant and powerful theories in systems and control that are not somehow related to Brockett. Better yet, many powerful theories emerged directly from his work. Let me highlight five topics/directions I find remarkably beautiful:

(Lie algebras): As put by Brockett himself, it is only natural to study the link between Lie theory and control theory since the two are intimately connected through differential equations |1.2|. ‘‘Completing this triangle’’ turned out to be rather fruitful, in particular via Frobenius’ theorem. Brockett played a key role in bringing Lie theoretic methods to control theory, a nice example is his 1973 paper on control systems on spheres (in general, his work on bilinear systems was of great interest) |5|.

(Differential geometric methods): Together with several others, Brockett was one of the first to see how differential geometric methods allowed for elegantly extending ideas from linear control (linear algebra) to the realm of nonlinear control. A key event is the 1972 conference co-organized with David Mayne |1|. See also |2| for a short note on the history of geometric nonlinear control written by Brockett himself.

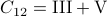

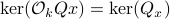

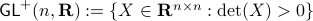

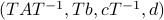

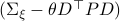

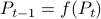

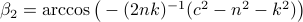

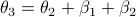

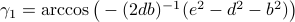

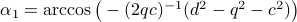

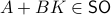

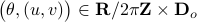

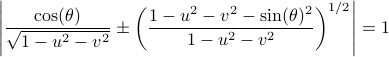

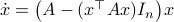

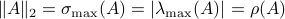

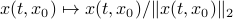

(Brockett's condition): After pioneering work on (local) nonlinear controllability in the 70s it was observed (by means of low-dimensional counterexamples) that controllability is not sufficient for the existence of a stabilizing continuous feedback. This observation was firmly established in the (1982) 1983 paper by Brockett |3| where he provides his topological necessary condition (Theorem 1 (iii)) for the existence of a stabilizing differentiable feedback, i.e., for a system $\dot{x}=f(x,u)$ with $f(0,0)=0$, the map $(x,u)\mapsto f(x,u)$ must be locally onto (the same is true for continuous feedback). Formally speaking, this paper is not the first (see Geometrical Methods of Nonlinear Analysis and this paper by Zabczyk), yet, this paper revolutionized how to study necessary conditions for nonlinear stabilization and inspired an incredible amount of work.
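To see the condition at work, consider a standard three-dimensional example, often called the nonholonomic integrator. The coordinate names $x_i$ and $u_i$ below are chosen here purely for illustration, and the system is written as $\dot{x}=f(x,u)$.

```latex
\begin{aligned}
\dot{x}_1 &= u_1,\\
\dot{x}_2 &= u_2,\\
\dot{x}_3 &= x_2 u_1 - x_1 u_2.
\end{aligned}
% For any \varepsilon \neq 0, the point (0,0,\varepsilon) is not in the image of
% (x,u) \mapsto f(x,u): the first two components force u_1 = u_2 = 0,
% and then the third component equals 0, not \varepsilon.
```

Hence the map $(x,u)\mapsto f(x,u)$ is not locally onto, so no continuous time-invariant feedback can asymptotically stabilize the origin, even though this system is controllable.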

(Nonlinear control systems): Although we are still far from the definitive control system (modelling) framework, Brockett largely contributed to a better understanding of structure. Evidently, this neatly intertwines with the previous points on bilinear systems (Lie algebras) and differential geometry, however, let me also mention that the fiber bundle perspective (going beyond Cartesian products) is often attributed to Brockett |4|.

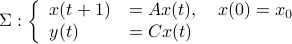

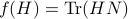

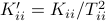

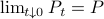

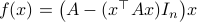

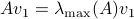

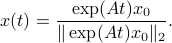

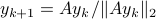

(Dynamical systems perspective on optimization): Nowadays we still see more and more work on the continuous-time (and system theoretic) viewpoint with respect to optimization algorithms. One can argue that Brockett was also of great importance here. It is not particularly surprising that one can study gradient flows to better understand gradient descent algorithms, however, it turned out that one can understand a fair amount of routines from (numerical) linear algebra through the lens of (continuous-time) dynamical systems. Brockett initiated a significant part of this work with his 1988 paper on the applications of the double bracket equation $\dot{H}=[H,[H,N]]$ |6|. For a more complete overview, including a foreword by Brockett, see Optimization and Dynamical Systems.
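To get a feeling for the double bracket equation $\dot{H}=[H,[H,N]]$, here is a minimal numerical sketch. It assumes a symmetric $H(0)$ with eigenvalues 1, 2, 3 and $N=\mathrm{diag}(1,2,3)$. The discretization (a Cayley-transform step, chosen because it keeps the spectrum of $H$ fixed) and all function names are my own choices for illustration, not taken from Brockett's paper.

```python
import numpy as np

def bracket(A, B):
    """Matrix commutator [A, B] = AB - BA."""
    return A @ B - B @ A

def double_bracket_flow(H0, N, step=5e-3, iters=10000):
    """Approximately integrate dH/dt = [H, [H, N]] for a symmetric H0."""
    H = np.array(H0, dtype=float)
    I = np.eye(H.shape[0])
    for _ in range(iters):
        K = bracket(H, N)  # skew-symmetric when H and N are symmetric
        # Cayley transform of step*K: an orthogonal matrix close to exp(step*K).
        U = np.linalg.solve(I - 0.5 * step * K, I + 0.5 * step * K)
        H = U.T @ H @ U      # similarity transform, so the spectrum is preserved
        H = 0.5 * (H + H.T)  # remove round-off asymmetry
    return H

def example():
    """Hypothetical test case: a rotated copy of diag(3, 1, 2)."""
    rng = np.random.default_rng(0)
    Q, _ = np.linalg.qr(rng.standard_normal((3, 3)))
    H0 = Q @ np.diag([3.0, 1.0, 2.0]) @ Q.T
    N = np.diag([1.0, 2.0, 3.0])
    return double_bracket_flow(H0, N)

if __name__ == "__main__":
    print(np.round(example(), 6))
```

For a generic initial condition one expects $H$ to approach a diagonal matrix whose entries are the eigenvalues of $H(0)$, ordered like the diagonal of $N$. This is the sense in which the flow "sorts lists".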

References (all by Brockett).

|1|: Geometric Methods in System Theory - Proceedings of the NATO Advanced Study Institute held at London, England, August 27-September 7, 1973, ed. with Mayne, D. Reidel Publishing Company (1973).

|1.2|: Chapter: Lie Algebras and Lie groups in Control Theory in |1|.

|2|: The early days of geometric nonlinear control, Automatica (2014).

|3|: Asymptotic stability and feedback stabilization, Differential Geometric Control Theory, ed. with Millman and Sussmann, Birkhäuser (1983).

|4|: Control theory and analytical mechanics, Geometric Control Theory, Lie Groups: History, Frontiers and Applications, Vol. VII, ed. Martin and Hermann, Math Sci Press, (1976).

|5|: Lie Theory and Control Systems Defined on Spheres, SIAM Journal on Applied Mathematics (1973).

|6|: Dynamical systems that sort lists and solve linear programming problems, IEEE CDC (1988).

See also this 2022 interview (video) with John Baillieul and the foreword to this book for more on the person behind the researcher.

On Critical Transitions in Nature and Society |29 January 2023|

tags: math.DS

The aim of this post is to highlight the 2009 book Critical Transitions in Nature and Society by Prof. Scheffer, which is perhaps more timely than ever and I strongly recommend this book to essentially anyone.

Far too often we (maybe not you) assume that systems are simply reversible, e.g. based on overly simplified models (think of a model corresponding to a flow). The crux of this book is that for many ecosystems such a reversal might be very hard or even practically impossible. Evidently, this observation has many serious ramifications with respect to understanding and tackling climate change.

Mathematically, this can be understood via bifurcation theory, in particular, via folds and cusps, introducing a form of hysteresis. The hysteresis is important here, as it means that simply restoring some parameters of an ecosystem does not imply that the state of the ecosystem returns to the state that previously corresponded to those parameters.
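A minimal sketch of such hysteresis, using the textbook scalar model $\dot{x}=r+x-x^3$ with a slowly varying parameter $r$. This model, the step sizes and the function names are assumptions chosen for illustration and are not taken from the book.

```python
def sweep(r_values, x0, dt=0.01, steps_per_r=500):
    """Let x relax under dx/dt = r + x - x**3 for each r in turn (forward Euler)."""
    x = x0
    states = []
    for r in r_values:
        for _ in range(steps_per_r):
            x += dt * (r + x - x**3)
        states.append(x)
    return states

def hysteresis_demo(n=201):
    """Sweep r slowly from -1 to 1 and back again, starting on the lower branch."""
    r_up = [-1.0 + 2.0 * k / (n - 1) for k in range(n)]
    r_down = list(reversed(r_up))
    x_up = sweep(r_up, x0=-1.3)
    x_down = sweep(r_down, x0=x_up[-1])
    return r_up, x_up, r_down, x_down

if __name__ == "__main__":
    r_up, x_up, r_down, x_down = hysteresis_demo()
    mid = len(r_up) // 2  # index where r = 0 in both sweeps
    print("r = 0 on the way up:  ", round(x_up[mid], 3))
    print("r = 0 on the way down:", round(x_down[mid], 3))
```

At the same parameter value $r=0$ the state sits on a different branch depending on the direction of the sweep. The jumps occur near the fold points $r=\pm 2/(3\sqrt{3})$, so undoing a parameter change does not undo the transition.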

The book makes a good point that catastrophe theory and chaos theory (old friends of bifurcation theory) themselves went through a critical transition, that is, popular media (and some authors) blew up those theories. To that end, see the following link for a crystal clear introduction to catastrophe theory by Zeeman (including some controversy), as well as this talk by Ralph Abraham on the history (locally) of chaos theory. One might argue that some popular fields these days are also trapped in a similar situation.

Scheffer stays away from drawing overly strong conclusions and throughout the book one can find many discussions on how and if to relate these models to reality. Overall, Scheffer promotes system theoretic thinking. For example, at first sight we might be inclined to see big (stochastic) events, e.g. a meteor hitting the earth, as the cause of some disruptive event, while actually something else might have been slowly degrading, e.g. some basin of attraction shrunk, and this stochastic event was just the final push. This makes the book very timely: climate change is slowly changing ecosystems around us, we are just waiting for a detrimental push. To give another example, in Chapter 5 we find an interesting discussion on the relation between biodiversity and stability. Scheffer highlights two points: (i) robustness (there is plenty of back-up) and (ii) complementation (if many can perform a task, some of them will be very good at it). Overall, the discussions on ‘‘why we have so many animals’’ are very interesting.

One of the main concepts in the book is that of resilience, which is (re)-defined several times (as part of the storyline in the book), in particular, on page 103 we find it being defined as ‘‘the capacity of a system to absorb disturbance and reorganize while undergoing change so as to still retain essentially the same function, structure, identity, and feedbacks.’’ Qualitatively, this is precisely the combination of a system having an attractor and that attractor being structurally stable. However, the goal here is to quantify this structural stability to some extent. Indeed, one enters bifurcation theory, or if you like, topological dynamical systems theory.

Throughout, Scheffer provides incredibly many examples of great inspiration to anyone in dynamical systems and control theory, e.g. the basin of attraction, multistability and time-scale separation are recurring themes. My favourite simple example (of positive feedback) is the ice-albedo feedback: (too) simply put, less ice means less reflection of light and hence more heat absorption, resulting in even less ice (as such, the positive feedback). More details can be found in Chapter 8. Another detailed series of examples is contained in Chapter 7 on shallow lakes. This chapter explains through a qualitative lens why restoring lakes is inherently difficult. Again, (too) simply put, as with ice, if plants disappear in lakes, turbidity is promoted, making it more difficult for plants to return (they need sunlight and thus prefer clear water). For this lake model, one can find some equations in Appendix 12, which is essentially a Lotka-Volterra model. As such, some readers might be unsatisfied when it comes to the mathematical content*. Moreover, at times Scheffer himself describes bifurcations as exotic dynamical systems jargon and throughout the book one might get the feeling that the dynamical systems community has no clue how to handle time-varying models. Luckily, the latter is not completely true. Perhaps the crux here is that we can use more cross-contamination between scientific communities. In particular, recognizing these bifurcations (critical transitions), not just in models but in reality, is still a big and pressing open problem.

*A fantastic and easy-going short book on catastrophe theory is written, of course, by Arnold.

The aim of this book was to have a widely accessible mathematical reference. The introduction to this book reads as: ‘‘This booklet explains what catastrophe theory is about and why it arouses such controversy. It also contains non-controversial results from the mathematical theories of singularities and bifurcation.’’

whereas Arnold ends with: ‘‘A qualitative peculiarity of Thom's papers on catastrophe theory is their original style: as one who presenses the direction of future investigations, Thom not only does not have proofs available, but does not even dispose of the precise formulations of his results. Zeeman, an ardent admirer of this style, observes that the meaning of Thom's words becomes clear only after inserting 99 lines of your own between every two of Thom's.’’

Counterexamples 1/N |9 January 2023|

tags: math.An

As nicely conveyed by the 1964 book Counterexamples in Analysis by Gelbaum and Olmsted, mathematics is not all about proving theorems; equally important is a large collection of (counter)examples to sharpen our understanding of these theorems. This attitude towards mathematics can also be found in books by Arnold, Halmos and many others.

This short post highlights a few of my favourite examples from the book by Gelbaum and Olmsted.

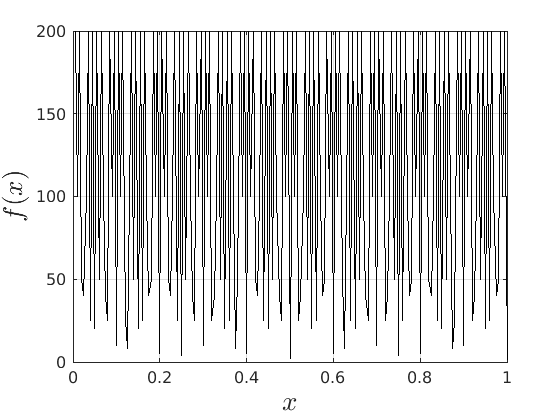

A function that is everywhere finite and everywhere locally unbounded.

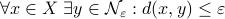

A function  is locally bounded when for each point in its domain one can find a neighbourhood

is locally bounded when for each point in its domain one can find a neighbourhood  such that

such that  is bounded on

is bounded on  .

Now consider the function

.

Now consider the function  defined by

defined by

Now assume that we could locally bound  on

on  (some open neighbourhood in

(some open neighbourhood in  ), that means that

), that means that  is bounded on such a neighbourhood and hence, so is

is bounded on such a neighbourhood and hence, so is  .

However, that would imply that

.

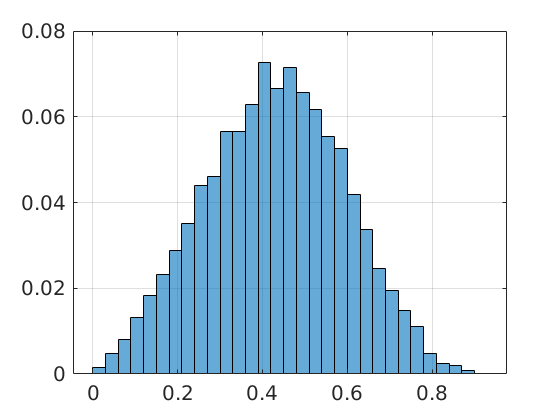

However, that would imply that  only contains finitely many rational numbers! We plot

only contains finitely many rational numbers! We plot  (approximately) below over

(approximately) below over ![[0,1]](eqs/5113014419904972428-130.png) for just

for just  points and observe precisely what one would expect.

points and observe precisely what one would expect.
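The unboundedness can also be checked by brute force. The sketch below assumes the function in question is the one from Gelbaum and Olmsted, that is, $f(p/q)=q$ for a rational $p/q$ in lowest terms with $q>0$. The helper names are hypothetical and only rational inputs are handled, since irrational numbers cannot be represented exactly here.

```python
from fractions import Fraction
from math import ceil, floor

def f(x):
    """f(p/q) = q for a rational x = p/q in lowest terms with q > 0."""
    return Fraction(x).denominator

def largest_value_near(center, radius, max_den):
    """Largest f(x) over rationals k/q in (center - radius, center + radius) with q <= max_den."""
    lo, hi = center - radius, center + radius
    best = 0
    for q in range(1, max_den + 1):
        for k in range(floor(lo * q), ceil(hi * q) + 1):
            x = Fraction(k, q)  # automatically reduced to lowest terms
            if lo < x < hi:
                best = max(best, f(x))
    return best

if __name__ == "__main__":
    center, radius = Fraction(1, 2), Fraction(1, 100)
    for max_den in (10, 100, 1000):
        print(max_den, largest_value_near(center, radius, max_den))
```

However small the neighbourhood, allowing larger denominators keeps producing larger values of $f$, which is exactly the failure of local boundedness.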


Two disjoint closed sets that are at a zero distance.

This example is not that surprising, but it nicely illustrates the pitfall of thinking of sets as ‘‘blobs’’ (in Dutch we would say potatoes) in the plane.

Indeed, the counterexample can be interpreted as an asymptotic result.

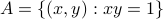

Consider $A=\{(x,y)\in\mathbb{R}^2 : xy=1\}$, this set is closed as $(x,y)\mapsto xy$ is continuous. Then, take $B=\{(x,y)\in\mathbb{R}^2 : y=0\}$, this set is again closed and clearly $A$ and $B$ are disjoint. However, since we can write $y=1/x$ on $A$, then as $x\to\infty$, we find that $A$ approaches $B$ arbitrarily closely. One can create many more examples of this form, e.g., using logarithms.
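The distance computation can be made explicit. The following assumes that the two sets are the hyperbola $A=\{(x,y): xy=1\}$ and the horizontal axis $B=\{(x,y): y=0\}$, with the Euclidean norm on the plane.

```latex
0 \le d(A,B) = \inf_{a\in A,\; b\in B} \|a-b\|_2
\le \inf_{x>0} \left\| (x, 1/x) - (x, 0) \right\|_2
= \inf_{x>0} \frac{1}{x} = 0 .
```

The infimum is not attained, since $A\cap B=\emptyset$. This is precisely why the example is asymptotic in nature.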

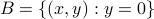

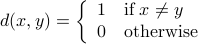

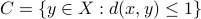

An open and closed metric ball with the same center and radius such that the closure of the open ball is unequal to the closed ball.

This last example is again not that surprising if you are used to going beyond Euclidean spaces. Let $(X,d)$ be a metric space under the metric

$$d(x,y)=\begin{cases} 0 & \text{if } x=y,\\ 1 & \text{otherwise}.\end{cases}$$

Now assume that $X$ is not simply a singleton. Then, let $B_1(x)$ be the open metric ball of radius $1$ centered at $x$ and similarly, let $\bar{B}_1(x)$ be the closed metric ball of radius $1$ centered at $x$. Indeed, $B_1(x)=\{x\}$ whereas $\bar{B}_1(x)=X$. Now to construct the closure of $B_1(x)$, we must first understand the topology induced by the metric $d$. In other words, we must understand the limit points of $B_1(x)$. Indeed, $d$ is the discrete metric and this metric induces the discrete topology on $X$. Hence, it follows (which is perhaps the counterintuitive part) that $\mathrm{cl}(B_1(x))=\{x\}$ and hence $\mathrm{cl}(B_1(x))\neq\bar{B}_1(x)$. This example also nicely shows a difference between metrics and norms. As norms satisfy $\|\alpha x\|=|\alpha|\,\|x\|$ (absolute homogeneity) one cannot have the discontinuous behaviour from the example above.
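For a finite set the claim can be verified directly. The sketch below assumes the discrete metric (distance 0 between equal points and 1 otherwise) and radius 1. The three-point set and the helper names are hypothetical.

```python
def d(x, y):
    """Discrete metric: 0 if the points coincide, 1 otherwise."""
    return 0 if x == y else 1

def open_ball(X, center, radius):
    return {x for x in X if d(center, x) < radius}

def closed_ball(X, center, radius):
    return {x for x in X if d(center, x) <= radius}

def closure(X, S):
    """Points of X at distance zero from the non-empty set S."""
    return {x for x in X if min(d(x, s) for s in S) == 0}

if __name__ == "__main__":
    X = {"a", "b", "c"}
    ball = open_ball(X, "a", 1)
    print("open ball:           ", ball)
    print("closure of open ball:", closure(X, ball))
    print("closed ball:         ", closed_ball(X, "a", 1))
```

The open ball is a single point and is already closed, while the closed ball of the same radius is the whole space.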

Further examples I particularly enjoyed are a discontinuous linear function, a function that is discontinuous in two variables, yet continuous in both variables separately and all examples related to Cantor. This post is inspired by TAing for Analysis 1 and will be continued in the near future.

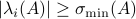

On ‘‘the art of the state’’ |29 December 2022|

tags: math.DS

In the last issue of the IEEE Control Systems Magazine, Prof. Sepulchre wrote an exhilarating short piece on the art of the state, or as he (also) puts it ‘‘the definite victory of computation over modeling in science and engineering’’. As someone with virtually no track record, I am happy to also shed my light on the matter.

We owe dynamical systems theory as we know it today largely to Henri Poincaré (1854-1912), who started this work motivated in part by describing the solar system. Better yet, the goal was to eventually understand its stability. However, we can be a bit more precise regarding what was driving all this work. In the introduction of Les méthodes nouvelles de la mécanique céleste (EN: The new methods of celestial mechanics) - Vol I by Poincaré, published in 1892 (HP92), we find (loosely translated): ‘‘The ultimate goal of Celestial Mechanics is to resolve this great question of whether Newton's law alone explains all astronomical phenomena; the only way to achieve this is to make observations as precise as possible and then compare them with the results of the calculation.’’ and then after a few words on divergent series: ‘‘these developments should attract the attention of geometers, first for the reasons I have just explained and also for the following: the goal of Celestial Mechanics is not reached when one has calculated more or less approximate ephemerides without being able to realize the degree of approximation obtained. If we notice, in fact, a discrepancy between these ephemerides and the observations, we must be able to recognize if Newton's law is at fault or if everything can be explained by the imperfection of the theory.’’ It is perhaps interesting to note that his 1879 thesis entitled: Sur les propriétés des fonctions définies par les équations aux différences partielles (EN: On the properties of functions defined by partial differential equations) was said to lack examples (p.109 FV12).

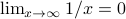

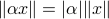

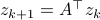

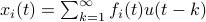

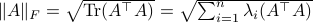

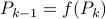

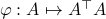

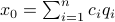

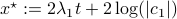

In the 3 volumes and well over 1000 pages of the new celestial mechanics, Poincaré develops a host of tools towards the goal sketched above. Indeed, this line of work contributed to the popularizing of the infamous equation

$$\dot{x}=f(x) \qquad (1)$$

or as used in control theory: $x^{+}=f(x,u)$, for $(\cdot)^{+}$ some ‘‘update’’ operator. However, I would like to argue that the main issue with Equation (1) is not that it is frequently used, but how it is used, moving from the qualitative to the quantitative.

Unfortunately we cannot ask him, but I believe Poincaré would wholeheartedly agree with Sepulchre. Inspired by the beautiful book by Prof. Verhulst (FV12) I will try to briefly illustrate this claim. As put by Verhulst (p.80 FV12): ‘‘In his writings, Poincaré was interested primarily in obtaining insight into physical reality and mathematical reality — two different things.’’ In fact, the relation between analysis and physics was also the subject of Poincaré’s plenary lecture at the first International Congress of Mathematicians, in Zurich (1897). (p.91 FV12).

In what follows we will have a closer look at La Valeur de la Science (EN: The value of science) by Poincaré, published in 1905 (HP05). This is one of his more philosophical works. We already saw how Poincaré was largely motivated by a better understanding of physics. We can further illustrate how he — being one of the founders of many abstract mathematical fields — thought of physics. To that end, consider the following two quotes:

‘‘when physicists ask of us the solution of a problem, it is not a duty-service they impose upon us, it is on the contrary we who owe them thanks.’’ (p.82 HP05)

‘‘Fourier's series is a precious instrument of which analysis makes continual use, it is by this means that it has been able to represent discontinuous functions; Fourier invented it to solve a problem of physics relative to the propagation of heat. If this problem had not come up naturally, we should never have dared to give discontinuity its rights; we should still long have regarded continuous functions as the only true functions.’’ (p.81 HP05)

What is more, this monograph contains a variety of discussions of the form ‘‘If we assume A, B and C, what does this imply?’’, both mathematically and physically. For instance, after a discussion on Carnot's Theorem (principle), or if you like the ramifications of a global flow, Poincaré writes the following:

‘‘On this account, if a physical phenomenon is possible, the inverse phenomenon should be equally so, and one should be able to reascend the course of time. Now, it is not so in nature, …’’ (p.96 HP05)

However, Poincaré continues and argues that although notions like friction seem to lead to an irreversible process this is only so because we look at the process through the wrong lens.

‘‘For those who take this point of view, Carnot's principle is only an imperfect principle, a sort of concession to the infirmity of our senses; it is because our eyes are too gross that we do not distinguish the elements of the blend; it is because our hands are too gross that we can not force them to separate; the imaginary demon of Maxwell, who is able to sort the molecules one by one, could well constrain the world to return backward.’’ (p.97 HP05)

Indeed, the section is concluded by stating that perhaps we should look through the lens of statistical mechanics. All to say that if physical reality ought to be described, this must be on the basis of solid principles. Let me highlight another principle: conservation of energy. Poincaré writes the following:

‘‘I do not say: Science is useful, because it teaches us to construct machines. I say: Machines are useful, because in working for us, they will some day leave us more time to make science.’’ (p.88 HP05)

‘‘Well, in regard to the universe, the principle of the conservation of energy is able to render us the same service. The universe is also a machine, much more complicated than all those of industry, of which almost all the parts are profoundly hidden from us; but in observing the motion of those that we can see, we are able, by the aid of this principle, to draw conclusions which remain true whatever may be the details of the invisible mechanism which animates them.’’ (p.94 HP05)

Then, a few pages later, his brief story on radium starts as follows:

‘‘At least, the principle of the conservation of energy yet remained to us, and this seemed more solid. Shall I recall to you how it was in its turn thrown into discredit?’’ (p.104 HP05)

Poincaré's viewpoint is to some extent nicely captured by the following piece in the book by Verhulst:

‘‘Consider a mature part of physics, classical mechanics (Poincaré 02). It is of interest that there is a difference in style between British and continental physicists. The former consider mechanics an experimental science, while the physicists in continental Europe formulate classical mechanics as a deductive science based on a priori hypotheses. Poincaré agreed with the English viewpoint, and observed that in the continental books on mechanics, it is never made clear which notions are provided by experience, which by mathematical reasoning, which by conventions and hypotheses.’’ (p.89 FV12)

In fact, for both mathematics and physics Poincaré spoke of conventions, to be understood as a convenience in physics. Overall, we see that Poincaré's attitude to applying mathematical tools to practical problems is significantly more critical than what we see nowadays in some communities. For instance:

‘‘Should we simply deduce all the consequences, and regard them as intangible realities? Far from it; what they should teach us above all is what can and what should be changed.’’ (p.79 HP05)

A clear example of this viewpoint appears in his 1909 lecture series at Göttingen on relativity:

‘‘Let us return to the old mechanics that admitted the principle of relativity. Instead of being founded on experiment, its laws were derived from this fundamental principle. These considerations were satisfactory for purely mechanical phenomena, but the old mechanics didn’t work for important parts of physics, for instance optics.’’ (p.209 FV12)

Now it is tempting to believe that Poincaré would have liked to get rid of ‘‘the old’’, but this is far from the truth.

‘‘We should not have to regret having believed in the principles, and even, since velocities too great for the old formulas would always be only exceptional, the surest way in practise would be still to act as if we continued to believe in them. They are so useful, it would be necessary to keep a place for them. To determine to exclude them altogether would be to deprive oneself of a precious weapon.’’ (p.111 HP05)

The previous was about the ‘‘old’’ and ‘‘new’’ mechanics and how differences in mathematical and physical realities might emerge. First and foremost, the purpose of the above is to show that one of the main figures in abstract dynamical systems theory, someone who partially founded the field motivated by a computational question, greatly cared about correct modelling.

This is one part of the story. The second part entails the recollection that Poincaré's work was largely qualitative. Precisely this qualitative viewpoint allows for imperfections in the modelling process. However, this also means conclusions can only be qualitative. To provide two examples: based on the Hartman-Grobman theorem and the genericity of hyperbolic equilibrium points it is perfectly reasonable to study linear dynamical systems, however, only to understand local qualitative behaviour of the underlying nonlinear dynamical system. The aim to draw quantitative conclusions is often futile and simply not the purpose of the tools employed. Perhaps more interesting and closer to Poincaré, the Lorenz system, being a simplified mathematical model for atmospheric convection, is well-known for being chaotic. Now, weather-forecasters do use this model, not to predict the weather, but to elucidate why their job is hard!
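To illustrate why quantitative prediction is hard for the Lorenz system, here is a minimal sketch that integrates two trajectories whose initial conditions differ by $10^{-8}$. The classical parameter values $\sigma=10$, $\rho=28$, $\beta=8/3$, the initial point $(1,1,1)$, the Runge-Kutta integrator and the horizon are all assumptions chosen for illustration.

```python
def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz system."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

def rk4_step(f, s, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = f(s)
    k2 = f(tuple(si + 0.5 * dt * ki for si, ki in zip(s, k1)))
    k3 = f(tuple(si + 0.5 * dt * ki for si, ki in zip(s, k2)))
    k4 = f(tuple(si + dt * ki for si, ki in zip(s, k3)))
    return tuple(
        si + dt / 6.0 * (a + 2.0 * b + 2.0 * c + e)
        for si, a, b, c, e in zip(s, k1, k2, k3, k4)
    )

def max_separation(eps=1e-8, dt=0.01, steps=4000):
    """Largest distance between two trajectories started eps apart."""
    a = (1.0, 1.0, 1.0)
    b = (1.0 + eps, 1.0, 1.0)
    worst = 0.0
    for _ in range(steps):
        a = rk4_step(lorenz, a, dt)
        b = rk4_step(lorenz, b, dt)
        sep = sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5
        worst = max(worst, sep)
    return worst

if __name__ == "__main__":
    print("initial separation: 1e-08")
    print("largest separation:", max_separation())
```

The tiny initial error grows until it is comparable to the size of the attractor itself. The qualitative picture (a bounded, chaotic attractor) is robust, while the quantitative forecast is not.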

Summarizing, I believe the editorial note by Prof. Sepulchre is very timely as some have forgotten that the initial dynamical systems work by Poincaré and others was to get a better understanding, to address questions, to learn from, not to end with. Equation (1) is not magical, but a tool to build the bridge, a tool to see if and where the bridge can be built.

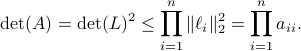

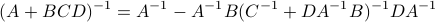

On the complexity of matrix multiplications |10 October 2022|

tags: math.LA

Recently, DeepMind published new work on ‘‘discovering’’ numerical linear algebra routines. This is remarkable, but I leave the assessment of this contribution to others. Rather, I would like to highlight the earlier discovery of Strassen. Namely, how to improve upon the obvious matrix multiplication algorithm.

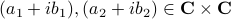

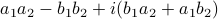

To get some intuition, consider multiplying two complex numbers $a+bi$ and $c+di$, that is, construct $(a+bi)(c+di)=(ac-bd)+(ad+bc)i$. In this case, the obvious thing to do would lead to 4 multiplications, namely $ac$, $bd$, $ad$ and $bc$. However, consider using only $(a+b)(c+d)$, $ac$ and $bd$. These multiplications suffice to construct $(a+bi)(c+di)$ (subtract the latter two from the first one). The emphasis is on multiplications as it seems to be folklore knowledge that multiplications (and especially divisions) are the most costly. However, note that we do use more additive operations. Are these operations not equally ‘‘costly’’? The answer to this question is delicate and still changing!
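The three-multiplication trick can be checked in a few lines. This is a minimal sketch; the function name `complex_mul_3` is just an illustrative choice, and Python's built-in `complex` type is used only as a reference.

```python
def complex_mul_3(a, b, c, d):
    """Return (real, imag) of (a + bi)(c + di) using three real multiplications."""
    p1 = (a + b) * (c + d)  # equals ac + ad + bc + bd
    p2 = a * c
    p3 = b * d
    real = p2 - p3          # ac - bd
    imag = p1 - p2 - p3     # ad + bc: subtract the latter two from the first
    return real, imag


result = complex_mul_3(1, 2, 3, 4)
reference = complex(1, 2) * complex(3, 4)
print(result, reference)
```

Note that the saving of one multiplication is paid for with three extra additions or subtractions, which is exactly the trade-off discussed above.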

When working with integers, additions are indeed generally ‘‘cheaper’’ than multiplications. However, oftentimes we work with floating points. Roughly speaking, these are numbers $x$ characterized by some (signed) mantissa $m$, a base $b$ and some (signed) exponent $e$, specifically, $x=m\cdot b^{e}$. For instance, consider the addition $m_1b^{e_1}+m_2b^{e_2}$ with $e_1\geq e_2$. To perform the addition, one normalizes the two numbers, e.g., one writes $m_2b^{e_2}=(m_2b^{e_2-e_1})b^{e_1}$. Then, to perform the addition one needs to take the appropriate precision into account, indeed, the result is $(m_1+m_2b^{e_2-e_1})b^{e_1}$, rounded to the available number of digits. All this example should convey is that we have sequential steps. Now consider multiplying the same numbers, that is, $m_1b^{e_1}\cdot m_2b^{e_2}$. In this case, one can proceed with two steps in parallel, on the one hand $m_1m_2$ and on the other hand $e_1+e_2$. Again, one needs to take the correct precision into account in the end, but we see that multiplication need by no means be significantly slower in general!
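To make the mantissa and exponent bookkeeping concrete, here is a small sketch using Python's `math.frexp` and `math.ldexp`, which work in base 2. The numbers `6.5` and `0.375` are my own illustrative choice, not taken from the text, and real hardware of course does all this in a single instruction.

```python
import math

x, y = 6.5, 0.375  # illustrative values (an assumption, not from the text)

# Decompose x = m * 2**e with 0.5 <= |m| < 1, i.e. base b = 2.
m1, e1 = math.frexp(x)
m2, e2 = math.frexp(y)

# Addition is sequential: first align both mantissas to the common
# (largest) exponent, only then add and rescale.
e = max(e1, e2)
aligned_sum = math.ldexp(m1, e1 - e) + math.ldexp(m2, e2 - e)
total = math.ldexp(aligned_sum, e)

# Multiplication: the mantissa product and the exponent sum are independent.
product = math.ldexp(m1 * m2, e1 + e2)

print((m1, e1), (m2, e2))
print(total == x + y, product == x * y)
```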

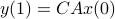

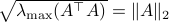

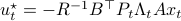

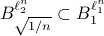

Now back to the matrix multiplication algorithms. Say we have

$$A = \left[\begin{array}{ll} A_{11} & A_{12} \\ A_{21} & A_{22} \end{array}\right],\quad B = \left[\begin{array}{ll} B_{11} & B_{12} \\ B_{21} & B_{22} \end{array}\right],$$

then, $C=AB$ is given by

$$C = \left[\begin{array}{ll} C_{11} & C_{12} \\ C_{21} & C_{22} \end{array}\right]= \left[\begin{array}{ll} A_{11}B_{11}+A_{12}B_{21} & A_{11}B_{12}+A_{12}B_{22} \\ A_{21}B_{11}+A_{22}B_{21} & A_{21}B_{12}+A_{22}B_{22} \end{array}\right].$$

Indeed, the most straightforward matrix multiplication algorithm for $A\in\mathbf{R}^{n\times n}$ and $B\in\mathbf{R}^{n\times n}$ costs you $n^3$ multiplications and $n^2(n-1)$ additions.

Using the intuition from the complex multiplication we might expect we can do with fewer multiplications. In 1969 (Numer. Math. 13 354–356) Volker Strassen showed that this is indeed true.

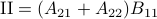

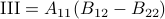

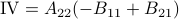

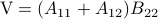

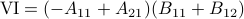

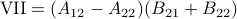

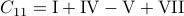

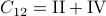

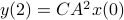

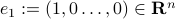

Following his short, but highly influential, paper, let $\mathrm{I}=(A_{11}+A_{22})(B_{11}+B_{22})$, $\mathrm{II}=(A_{21}+A_{22})B_{11}$, $\mathrm{III}=A_{11}(B_{12}-B_{22})$, $\mathrm{IV}=A_{22}(-B_{11}+B_{21})$, $\mathrm{V}=(A_{11}+A_{12})B_{22}$, $\mathrm{VI}=(-A_{11}+A_{21})(B_{11}+B_{12})$ and $\mathrm{VII}=(A_{12}-A_{22})(B_{21}+B_{22})$. Then, $C_{11}=\mathrm{I}+\mathrm{IV}-\mathrm{V}+\mathrm{VII}$, $C_{21}=\mathrm{II}+\mathrm{IV}$, $C_{12}=\mathrm{III}+\mathrm{V}$ and $C_{22}=\mathrm{I}+\mathrm{III}-\mathrm{II}+\mathrm{VI}$.

See that we need $7$ multiplications and $18$ additions to construct $C$ for both $A$ and $B$ being $2$-dimensional. The ‘‘obvious’’ algorithm would cost $8$ multiplications and $4$ additions. Indeed, one can show now that for matrices of size $2^k$ one would need $7^k$ multiplications (via induction). Differently put, one needs $n^{\log_2 7}\approx n^{2.81}$ multiplications.

This bound prevails when including all operations, but only asymptotically, in the sense that the total operation count is $O(n^{\log_2 7})$. In practical algorithms, not only multiplications, but also memory and indeed additions play a big role. I am particularly interested in how the upcoming DeepMind algorithms will take numerical stability into account.
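The count of $7^k$ scalar multiplications for matrices of size $2^k$ can be checked with a short recursive sketch. The products `m1` to `m7` below are Strassen's seven block products. The helper names, the counter argument and the test matrices are illustrative assumptions, and plain nested lists are used instead of an optimized library, so this is about counting and not about speed.

```python
def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def sub(X, Y):
    return [[x - y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def split(X):
    """Split a 2^k x 2^k matrix into its four square blocks."""
    h = len(X) // 2
    return ([r[:h] for r in X[:h]], [r[h:] for r in X[:h]],
            [r[:h] for r in X[h:]], [r[h:] for r in X[h:]])


def strassen(A, B, counter):
    """Multiply 2^k x 2^k matrices; counter[0] counts scalar multiplications."""
    if len(A) == 1:
        counter[0] += 1
        return [[A[0][0] * B[0][0]]]
    A11, A12, A21, A22 = split(A)
    B11, B12, B21, B22 = split(B)
    m1 = strassen(add(A11, A22), add(B11, B22), counter)
    m2 = strassen(add(A21, A22), B11, counter)
    m3 = strassen(A11, sub(B12, B22), counter)
    m4 = strassen(A22, sub(B21, B11), counter)
    m5 = strassen(add(A11, A12), B22, counter)
    m6 = strassen(sub(A21, A11), add(B11, B12), counter)
    m7 = strassen(sub(A12, A22), add(B21, B22), counter)
    C11 = add(sub(add(m1, m4), m5), m7)
    C12 = add(m3, m5)
    C21 = add(m2, m4)
    C22 = add(add(sub(m1, m2), m3), m6)
    top = [r1 + r2 for r1, r2 in zip(C11, C12)]
    bottom = [r1 + r2 for r1, r2 in zip(C21, C22)]
    return top + bottom


def naive(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


A = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
B = [[1, 0, 2, 0], [0, 1, 0, 2], [3, 0, 1, 0], [0, 3, 0, 1]]
count = [0]
C = strassen(A, B, count)
print(C == naive(A, B), count[0])  # 7^2 = 49 multiplications versus 4^3 = 64
```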

*Update: the improved complexity got immediately improved.

A corollary |6 June 2022|

tags: math.DS

The lack of mathematically oriented people in the government is an often observed phenomenon |1|. In this short note we clarify that this is a corollary to ‘‘An application of Poincaré's recurrence theorem to academic administration’’, by Kenneth R. Meyer. In particular, since the governmental system is qualitatively the same as that of academic administration, it is also conservative. However, due to well-known results also pioneered by Poincaré, this implies the following.

Corollary: The government is non-exact.

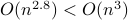

Co-observability |6 January 2022|

tags: math.OC

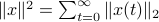

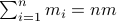

A while ago Prof. Jan H. van Schuppen published his book Control and System Theory of Discrete-Time Stochastic Systems. In this post I would like to highlight one particular concept from the book: (stochastic) co-observability, which is otherwise rarely discussed.

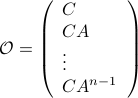

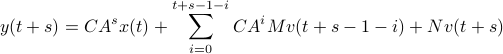

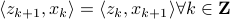

We start with recalling observability. Given a linear-time-invariant system with  ,

,  :

:

one might wonder if the initial state  can be recovered from a sequence of outputs

can be recovered from a sequence of outputs  . (This is of great use in feedback problems.) By observing that

. (This is of great use in feedback problems.) By observing that  ,

,  ,

,  one is drawn to the observability matrix

one is drawn to the observability matrix

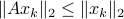

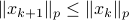

Without going into detectability, when  is full-rank, one can (uniquely) recover

is full-rank, one can (uniquely) recover  (proof by contradiction). If this is the case, we say that

(proof by contradiction). If this is the case, we say that  , or equivalenty the pair

, or equivalenty the pair  , is observable. Note that using a larger matrix (more data) is redudant by the Cayley-Hamilton theorem (If

, is observable. Note that using a larger matrix (more data) is redudant by the Cayley-Hamilton theorem (If  would not be full-rank, but by adding

would not be full-rank, but by adding  “from below” it would somehow be full-rank, one would contradict the Cayley-Hamilton theorem.).

Also note that in practice one does not “invert”

“from below” it would somehow be full-rank, one would contradict the Cayley-Hamilton theorem.).

Also note that in practice one does not “invert”  but rather uses a (Luenberger) observer (or a Kalman filter).

but rather uses a (Luenberger) observer (or a Kalman filter).
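As a small sanity check of the rank test, the sketch below stacks $C, CA, \dots, CA^{n-1}$ and computes the rank exactly with rational arithmetic. The helper names and the two example pairs are my own illustrative assumptions.

```python
from fractions import Fraction


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def observability_matrix(A, C):
    """Stack C, CA, ..., CA^(n-1) for an n-dimensional state."""
    rows, block = [], [row[:] for row in C]
    for _ in range(len(A)):
        rows.extend(block)
        block = matmul(block, A)
    return rows


def rank(M):
    """Rank via exact Gaussian elimination over the rationals."""
    M = [[Fraction(x) for x in row] for row in M]
    r = 0
    for c in range(len(M[0])):
        pivot = next((i for i in range(r, len(M)) if M[i][c] != 0), None)
        if pivot is None:
            continue
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, len(M)):
            factor = M[i][c] / M[r][c]
            M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        r += 1
    return r


A = [[1, 1], [0, 1]]
print(rank(observability_matrix(A, [[1, 0]])))  # measuring the first state
print(rank(observability_matrix(A, [[0, 1]])))  # measuring the second state
```

Measuring only the second state cannot reveal the first one here, since the second state evolves on its own, and the rank drops accordingly.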

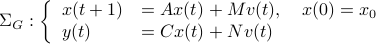

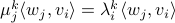

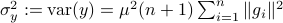

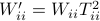

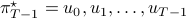

Now lets continue with a stochastic (Gaussian) system, can we do something similar? Here it will be even more important to only think about observability matrices as merely tools to assert observability. Let  be a zero-mean Gaussian random variable with covariance

be a zero-mean Gaussian random variable with covariance  and define for some matrices

and define for some matrices  and

and  the stochastic system

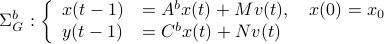

the stochastic system

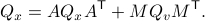

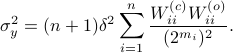

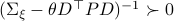

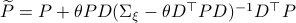

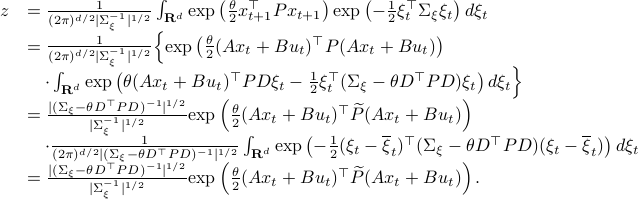

We will assume that  is asymptotically (exponentially stable) such that the Lyapunov equation describing the (invariant) state-covariance is defined:

is asymptotically (exponentially stable) such that the Lyapunov equation describing the (invariant) state-covariance is defined:

Now the support of the state  is the range of

is the range of  .

.

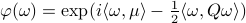

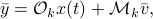

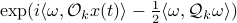

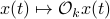

A convenient tool to analyze  will be the characteristic function of a Gaussian random variable

will be the characteristic function of a Gaussian random variable  , defined as

, defined as ![varphi(omega)=mathbf{E}[mathrm{exp}(ilangle omega, Xrangle)]](eqs/1509536698066778096-130.png) .

It can be shown that for a Gaussian random variable

.

It can be shown that for a Gaussian random variable

.

With this notation fixed, we say that

.

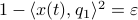

With this notation fixed, we say that  is stochastically observable on the internal

is stochastically observable on the internal  if the map

if the map

![x(t)mapsto mathbf{E}[mathrm{exp}(ilangle omega,bar{y}rangle|mathcal{F}^{x(t)}],quad bar{y}=(y(t),dots,y(t+k))in mathbf{R}^{pcdot(k+1)}quad forall omega](eqs/853891715523836887-130.png)

is injective on the support of  (note the

(note the  ). The intuition is the same as before, but now we want the state to give rise to an unique (conditional) distribution. At this point is seems rather complicated, but as it turns out, the conditions will be similar to ones from before. We start by writing down explicit expressions for

). The intuition is the same as before, but now we want the state to give rise to an unique (conditional) distribution. At this point is seems rather complicated, but as it turns out, the conditions will be similar to ones from before. We start by writing down explicit expressions for  , as

, as

we find that

for  the observability matrix corresponding to the data (length) of

the observability matrix corresponding to the data (length) of  ,

,  a matrix containing all the noise related terms and

a matrix containing all the noise related terms and  a stacked vector of noises similar to

a stacked vector of noises similar to  . It follows that

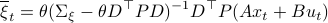

. It follows that ![x(t)mapsto mathbf{E}[mathrm{exp}(ilangle omega,bar{y})|mathcal{F}^{x(t)}]](eqs/1452358234763943133-130.png) is given by

is given by  , for

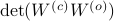

, for ![mathcal{Q}_k = mathbf{E}[mathcal{M}_kbar{v}bar{v}^{mathsf{T}}mathcal{M}_k^{mathsf{T}}]](eqs/8712945555052273745-130.png) . Injectivity of this map clearly relates directly to injectivity of

. Injectivity of this map clearly relates directly to injectivity of  . As such (taking the support into account), a neat characterization of stochastic observability is that

. As such (taking the support into account), a neat characterization of stochastic observability is that  .

.

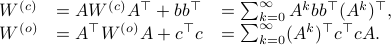

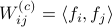

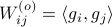

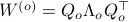

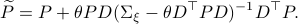

Then, to introduce the notion of stochastic co-observability we need to introduce the backward representation of a system. We term system representations like the deterministic and the stochastic system above “forward” representations as time runs forward, $t\mapsto t+1$. Assume that $Q_x\succ 0$, then see that the forward representation of a system matrix, denoted $A^f$, is given by $A^f = \mathbf{E}[x(t+1)x(t)^{\mathsf{T}}]Q_x^{-1}$. In a similar vein, the backward representation is given by $A^b=\mathbf{E}[x(t-1)x(t)^{\mathsf{T}}]Q_x^{-1}$.

Doing the same for the output matrix $C$ yields $C^b=\mathbf{E}[y(t-1)x(t)^{\mathsf{T}}]Q_x^{-1}$ and thereby the complete backward system

$$x(t-1)=A^bx(t)+Mv^b(t),\quad y(t-1)=C^bx(t)+Nv^b(t).$$

Note, to keep $M$ and $N$ fixed, we adjust the distribution of $v^b$.

Indeed, when $Q_x$ is not full-rank, the translation between forward and backward representations is not well-defined. Initial conditions $x(0)$ cannot be recovered.

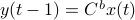

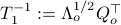

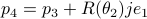

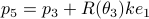

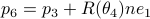

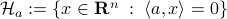

To introduce co-observability, ignore the noise for the moment and observe that $y(t-1)=C^bx(t)$, $y(t-2)=C^bA^bx(t)$, and so forth.

We see that when looking at observability using the backward representation, we can ask if it is possible to recover the current state using past outputs. Standard observability looks at past states instead. With this in mind we can define stochastic co-observability on the interval $\{t-k-1,\dots,t-1\}$ by demanding that the map

$$x(t)\mapsto \mathbf{E}[\mathrm{exp}(i\langle \omega,\bar{y}^b\rangle)|\mathcal{F}^{x(t)}],\quad \bar{y}^b=(y(t-1),\dots,y(t-k-1))\in \mathbf{R}^{p\cdot(k+1)}\quad \forall \omega$$

is injective on the support of $x(t)$ (note the $\forall\omega$). Of course, one needs to make sure that the backward representation is defined. It is no surprise that the conditions for stochastic co-observability will also be similar, but now using the co-observability matrix. What is remarkable, however, is that these notions do not always coincide.

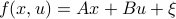

Let us look at when this can happen and what this implies. One reason to study these kinds of questions is to say something about (minimal) realizations of stochastic processes. Simply put, what is the smallest (as measured by the dimension of the state $x$) system that gives rise to a certain output process? When observability and co-observability do not agree, this indicates that the representation is not minimal. To get some intuition, we can do an example as adapted from the book. Consider the scalar (forward) Gaussian system

for  . The system is stochastically observable as  and  . Now for stochastic co-observability we see that $c^b=\mathbf{E}[y(t-1)x(t)]q_x^{-1}=0$, as such the system is not co-observable. What this shows is that $y(t)$ behaves as a Gaussian random variable, no internal dynamics are at play and such a minimal realization is of dimension $0$.

For this and a lot more, have a look at the book!

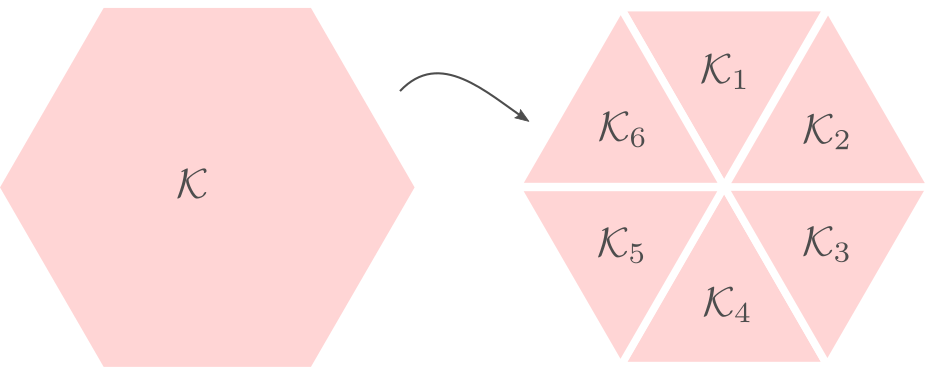

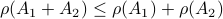

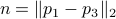

Fair convex partitioning |26 September 2021|

tags: math.OC

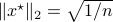

When learning about convex sets, the definitions seem so clean that perhaps you think all is known what could be known about finite-dimensional convex geometry. In this short note we will look at a problem which is still largely open beyond the planar case. This problem is called the fair partitioning problem.

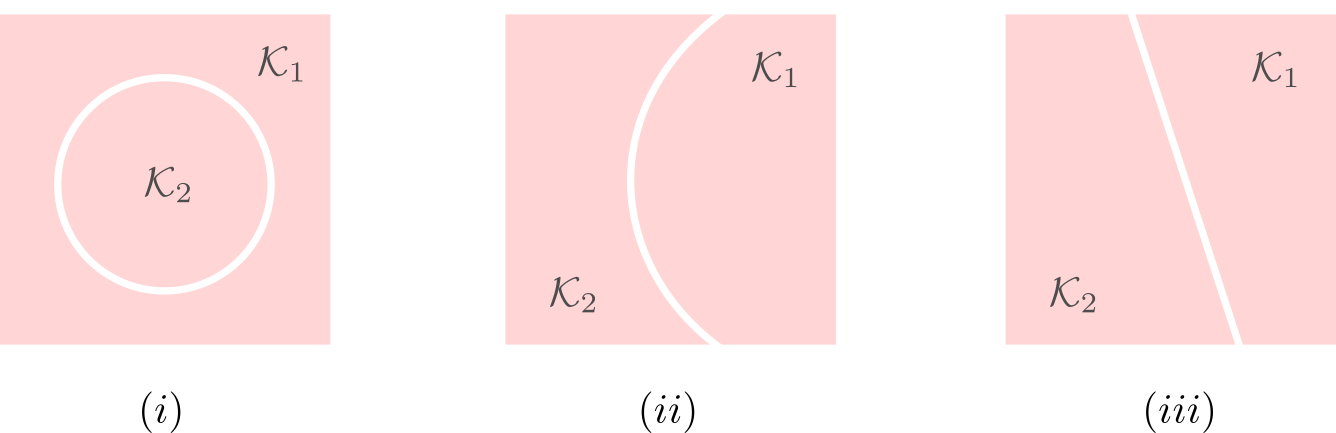

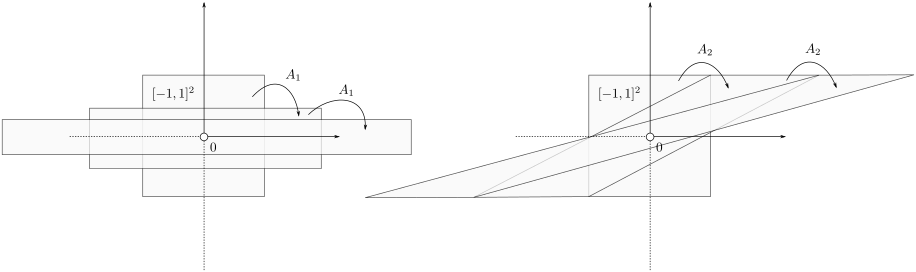

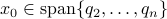

In the $2$-dimensional case, the question is the following, given any integer $n$, can a convex set $K\subset\mathbf{R}^2$ be divided into $n$ convex sets all of equal area and perimeter.

Differently put, does there exist a fair convex partitioning, see Figure 1.

|

Figure 1:

A partition of |

This problem was affirmatively solved in 2018, see this paper.

As you can see, this work was updated just a few months ago. The general proof is involved, let us see if we can do the case for a compact set and $n=2$.

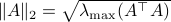

First of all, when splitting $K$ (into just two sets) you might think of many different methods to do so. What happens when the line is curved? The answer is that when the line is curved, one of the resulting sets must be non-convex, compare the options in Figure 2.

|

Figure 2:

A partitioning of the square in |

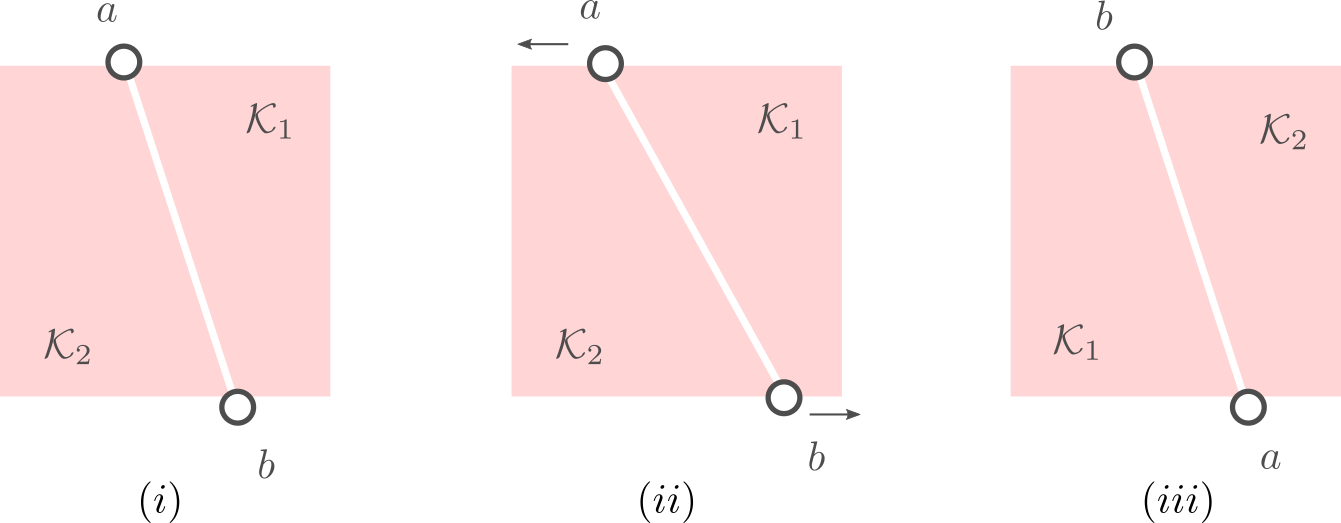

This observation is particularly useful as it implies we only need to look at two points on the boundary of $K$ (and the line between them). As $K$ is compact we can always select a cut such that the resulting perimeters of $K_1$ and $K_2$ are equal.

Let us assume that the points $p_1$ and $p_2$ in Figure 3.(i) are like that. If we start moving them around with equal speed, the resulting perimeters remain fixed. Better yet, as the cut is a straight line, the volumes (area) of the resulting sets $K_1$ and $K_2$ change continuously. Now the result follows from the Intermediate Value Theorem and seeing that we can flip the meaning of $K_1$ and $K_2$, see Figure 3.(iii).
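The argument above is constructive enough to run. Below is a minimal sketch for a convex polygon: the two cut points are kept half a perimeter apart along the boundary (so both pieces always have equal perimeter), and bisection on the arc-length position finds the cut where the areas agree, exactly as the Intermediate Value Theorem promises. All function names and the example triangle are illustrative assumptions.

```python
import math


def cumulative_lengths(poly):
    """Arc length of every vertex along the boundary; last entry = perimeter."""
    cum = [0.0]
    for i in range(len(poly)):
        cum.append(cum[-1] + math.dist(poly[i], poly[(i + 1) % len(poly)]))
    return cum


def point_at(poly, cum, s):
    """Boundary point at arc length s (mod perimeter) and its edge index."""
    s %= cum[-1]
    for i in range(len(poly)):
        if s <= cum[i + 1]:
            t = (s - cum[i]) / (cum[i + 1] - cum[i])
            (x0, y0), (x1, y1) = poly[i], poly[(i + 1) % len(poly)]
            return (x0 + t * (x1 - x0), y0 + t * (y1 - y0)), i
    return poly[0], 0


def piece(poly, cum, s):
    """Piece cut off by the chord from arc length s to s + perimeter / 2."""
    p, i = point_at(poly, cum, s)
    q, j = point_at(poly, cum, s + cum[-1] / 2)
    pts = [p]
    while i != j:
        i = (i + 1) % len(poly)
        pts.append(poly[i])
    return pts + [q]


def area(pts):
    return 0.5 * abs(sum(x0 * y1 - x1 * y0
                         for (x0, y0), (x1, y1) in zip(pts, pts[1:] + pts[:1])))


def perimeter(pts):
    return sum(math.dist(a, b) for a, b in zip(pts, pts[1:] + pts[:1]))


def fair_bisection(poly, tol=1e-12):
    """Split a convex polygon into two pieces of equal area and perimeter."""
    cum = cumulative_lengths(poly)
    half_area = area(poly) / 2
    f = lambda s: area(piece(poly, cum, s)) - half_area  # f(s + L/2) = -f(s)
    lo, hi = 0.0, cum[-1] / 2
    f_lo = f(lo)
    if f_lo == 0.0:
        hi = lo
    while hi - lo > tol:
        mid = (lo + hi) / 2
        f_mid = f(mid)
        if f_mid == 0.0:
            lo = hi = mid
        elif (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    s = (lo + hi) / 2
    return piece(poly, cum, s), piece(poly, cum, s + cum[-1] / 2)


triangle = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
K1, K2 = fair_bisection(triangle)
print(area(K1), area(K2), perimeter(K1), perimeter(K2))
```

The sign flip `f(s + L/2) = -f(s)` is the ‘‘flip the meaning of the two pieces’’ step: moving both points half way around the boundary swaps the two pieces.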

|

Figure 3:

By moving the points |

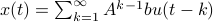

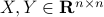

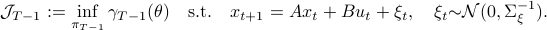

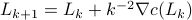

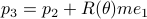

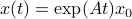

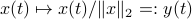

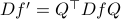

Solving Linear Programs via Isospectral flows |05 September 2021|

tags: math.OC, math.DS, math.DG

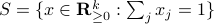

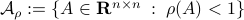

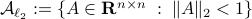

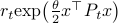

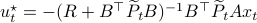

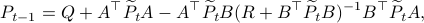

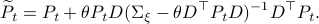

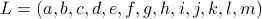

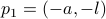

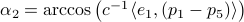

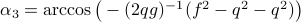

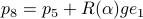

In this post we will look at one of the many remarkable findings by Roger W. Brockett. Consider a Linear Program (LP)

$$\max_{x\in X}\ \langle c,x\rangle\quad (1)$$

parametrized by the compact set $X=\{x\in\mathbf{R}^n:Ax\leq b\}$ and a suitable triple $(A,b,c)$.

As a solution to $(1)$ can always be found to be a vertex of $X$, a smooth method to solve $(1)$ seems somewhat awkward.

We will see that one can construct a so-called isospectral flow that does the job.

Here we will follow Dynamical systems that sort lists, diagonalize matrices and solve linear programming problems, by Roger W. Brockett (CDC 1988) and the book Optimization and Dynamical Systems by Uwe Helmke and John B. Moore (Springer 2ed. 1996).

Let $X$ have $k$ vertices, then one can always find a map $T:\mathbf{R}^k\to\mathbf{R}^n$, mapping the simplex $S=\{s\in\mathbf{R}^k_{\geq 0}:\sum_{j}s_j=1\}$ onto $X$.

Indeed, with some abuse of notation, let $T$ be a matrix defined as $T=(v_1,\dots,v_k)$, for $\{v_j\}_{j\in[k]}$, the vertices of $X$.

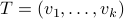

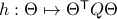

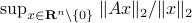

Before we continue, we need to establish some differential geometric results. Given the Special Orthogonal group $\mathsf{SO}(n)=\{\Theta\in\mathbf{R}^{n\times n}:\Theta^{\mathsf{T}}\Theta=I_n,\ \mathrm{det}(\Theta)=1\}$, the tangent space is given by $T_{\Theta}\mathsf{SO}(n)=\{\Theta\Omega:\Omega\in\mathbf{R}^{n\times n},\ \Omega^{\mathsf{T}}=-\Omega\}$. Note, this is the explicit formulation, which is indeed equivalent to shifting the corresponding Lie algebra.

The easiest way to compute this is to look at the kernel of the differential of the map defining the underlying manifold.

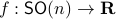

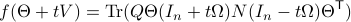

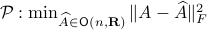

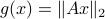

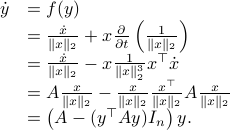

Now, following Brockett, consider the function $f:\mathsf{SO}(n)\to\mathbf{R}$ defined by $f(\Theta)=\mathrm{Tr}(Q\Theta N\Theta^{\mathsf{T}})$ for some symmetric matrices $Q,N\in\mathbf{R}^{n\times n}$. This approach is not needed for the full construction, but it allows for more intuition and more computations.

To construct the corresponding gradient flow, recall that the (Riemannian) gradient at $\Theta\in\mathsf{SO}(n)$ is defined via $df(\Theta)[V]=\langle \mathrm{grad}\,f(\Theta), V\rangle_{\Theta}$ for all $V\in T_{\Theta}\mathsf{SO}(n)$. Using the explicit tangent space representation, we know that $V=\Theta\Omega$ with $\Omega=-\Omega^{\mathsf{T}}$.

Then, see that by using

$$f(\Theta+tV)=f(\Theta)+t\,\mathrm{Tr}(QVN\Theta^{\mathsf{T}})+t\,\mathrm{Tr}(Q\Theta NV^{\mathsf{T}})+O(t^2)$$

we obtain the gradient via

$$df(\Theta)[V]=\lim_{t\downarrow 0}\frac{f(\Theta+tV)-f(\Theta)}{t} = \langle Q\Theta N, \Theta \Omega \rangle - \langle \Theta N \Theta^{\mathsf{T}} Q \Theta, \Theta \Omega \rangle.$$

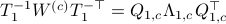

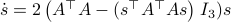

This means that (the minus is missing in the paper) the (a) gradient flow becomes

$$\dot{\Theta}(t)=Q\Theta(t) N-\Theta(t) N\Theta(t)^{\mathsf{T}}Q\Theta(t).$$

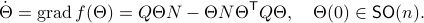

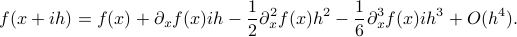

Consider the standard commutator bracket $[A,B]=AB-BA$ and see that for $H(t)=\Theta(t)^{\mathsf{T}}Q\Theta(t)$ one obtains from the equation above (typo in the paper)

$$\dot{H}(t) = [H(t),[H(t),N]],\quad H(0)=\Theta^{\mathsf{T}}Q\Theta\quad (2).$$

Hence, $(2)$ can be seen as a reparametrization of a gradient flow.

It turns out that $(2)$ has a variety of remarkable properties. First of all, see that $(2)$ preserves the eigenvalues of $Q$.

Also, observe the relation between extremizing $f$ and the function $g$ defined via $g(H)=-\tfrac{1}{2}\|N-H\|_F^2$. The idea to handle LPs is now that the limiting $H$ will relate to putting weight one on the correct vertex to get the optimizer, $N$ gives you this weight as it will contain the corresponding largest costs.
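The step from the gradient flow on the rotation to the double-bracket form $(2)$ can be verified by hand. The following worked derivation is a sketch under the assumptions that $Q$ and $N$ are symmetric, that $H=\Theta^{\mathsf{T}}Q\Theta$, and that the gradient flow reads $\dot{\Theta}=Q\Theta N-\Theta N\Theta^{\mathsf{T}}Q\Theta$ (the form consistent with the expression for $df(\Theta)[V]$ above).

```latex
\begin{aligned}
\dot{H} &= \dot{\Theta}^{\mathsf{T}}Q\Theta + \Theta^{\mathsf{T}}Q\dot{\Theta} \\
        &= \left(N\Theta^{\mathsf{T}}Q - \Theta^{\mathsf{T}}Q\Theta N\Theta^{\mathsf{T}}\right)Q\Theta
           + \Theta^{\mathsf{T}}Q\left(Q\Theta N - \Theta N\Theta^{\mathsf{T}}Q\Theta\right) \\
        &= N H^2 - HNH + H^2 N - HNH
           \qquad \text{using } \Theta^{\mathsf{T}}Q^2\Theta = \Theta^{\mathsf{T}}Q\Theta\,\Theta^{\mathsf{T}}Q\Theta = H^2 \\
        &= H(HN-NH) - (HN-NH)H \\
        &= [H,[H,N]].
\end{aligned}
```

The only place where orthogonality enters is $\Theta\Theta^{\mathsf{T}}=I$, which turns $\Theta^{\mathsf{T}}Q^2\Theta$ into $H^2$.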

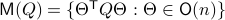

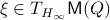

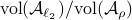

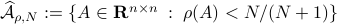

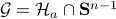

In fact, the matrix  can be seen as an element of the set

can be seen as an element of the set  .

This set is in fact a

.

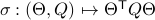

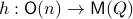

This set is in fact a  -smooth compact manifold as it can be written as the orbit space corresponding to the group action

-smooth compact manifold as it can be written as the orbit space corresponding to the group action  ,

,

, one can check that this map satisfies the group properties. Hence, to extremize

, one can check that this map satisfies the group properties. Hence, to extremize  over

over  , it appears to be appealing to look at Riemannian optimization tools indeed. When doing so, it is convenient to understand the tangent space of

, it appears to be appealing to look at Riemannian optimization tools indeed. When doing so, it is convenient to understand the tangent space of  . Consider the map defining the manifold

. Consider the map defining the manifold  ,

,  . Then by the construction of

. Then by the construction of  , see that

, see that ![dh(Theta)[V]=0](eqs/8898228761859333701-130.png) yields the relation

yields the relation ![[H,Omega]=0](eqs/197549272010137556-130.png) for any

for any  .

.

For the moment, let  such that

such that  and

and  .

First we consider the convergence of

.

First we consider the convergence of  .

Let

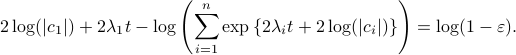

.

Let  have only distinct eigenvalues, then

have only distinct eigenvalues, then  exists and is diagonal.

Using the objective

exists and is diagonal.

Using the objective  from before, consider

from before, consider  and see that by using the skew-symmetry one recovers the following

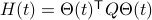

and see that by using the skew-symmetry one recovers the following

![begin{array}{lll} frac{d}{dt}mathrm{Tr}(H(t)N) &=& mathrm{Tr}(N [H,[H,N]]) &=& -mathrm{Tr}((HN-NH)^2) &=& |HN-NH|_F^2. end{array}](eqs/6018522323896655448-130.png)

This means the cost monotonically increases, but by compactness converges to some point  . By construction, this point must satisfy

. By construction, this point must satisfy ![[H_{infty},N]=0](eqs/5408610946697229240-130.png) . As

. As  has distinct eigenvalues, this can only be true if

has distinct eigenvalues, this can only be true if  itself is diagonal (due to the distinct eigenvalues).

itself is diagonal (due to the distinct eigenvalues).

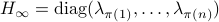

More can be said about  , let

, let  be the eigenvalues of

be the eigenvalues of  , that is, they correspond to the eigenvalues of

, that is, they correspond to the eigenvalues of  as defined above.

Then as

as defined above.

Then as  preserves the eigenvalues of

preserves the eigenvalues of  (

( ), we must have

), we must have  , for

, for  a permutation matrix.

This is also tells us there is just a finite number of equilibrium points (finite number of permutations). We will write this sometimes as

a permutation matrix.

This is also tells us there is just a finite number of equilibrium points (finite number of permutations). We will write this sometimes as  .

.

Now as  is one of those points, when does

is one of those points, when does  converge to

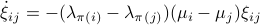

converge to  ? To start this investigation, we look at the linearization of

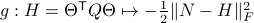

? To start this investigation, we look at the linearization of  , which at an equilibrium point

, which at an equilibrium point  becomes

becomes

for  . As we work with matrix-valued vector fields, this might seems like a duanting computation. However, at equilibrium points one does not need a connection and can again use the directional derivative approach, in combination with the construction of

. As we work with matrix-valued vector fields, this might seems like a duanting computation. However, at equilibrium points one does not need a connection and can again use the directional derivative approach, in combination with the construction of  , to figure out the linearization. The beauty is that from there one can see that

, to figure out the linearization. The beauty is that from there one can see that  is the only asymptotically stable equilibrium point of

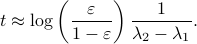

is the only asymptotically stable equilibrium point of  . Differently put, almost all initial conditions

. Differently put, almost all initial conditions  will converge to

will converge to  with the rate captured by spectral gaps in

with the rate captured by spectral gaps in  and

and  . If

. If  does not have distinct eigenvalues and we do not impose any eigenvalue ordering on

does not have distinct eigenvalues and we do not impose any eigenvalue ordering on  , one sees that an asymptotically stable equilibrium point

, one sees that an asymptotically stable equilibrium point  must have the same eigenvalue ordering as

must have the same eigenvalue ordering as  . This is the sorting property of the isospectral flow and this is of use for the next and final statement.

. This is the sorting property of the isospectral flow and this is of use for the next and final statement.
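The sorting property is easy to observe numerically. The sketch below integrates the double-bracket flow, $\dot{H}=[H,[H,N]]$, with a classical fourth-order Runge-Kutta scheme. The initial condition, the choice $N=\mathrm{diag}(3,2,1)$, the step size and the horizon are all illustrative assumptions. For this tridiagonal initial condition the flow coincides with the Toda flow, so convergence to the sorted diagonal is guaranteed rather than merely generic.

```python
import math


def matmul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def bracket(A, B):
    """Commutator [A, B] = AB - BA."""
    AB, BA = matmul(A, B), matmul(B, A)
    return [[AB[i][j] - BA[i][j] for j in range(len(A))] for i in range(len(A))]


def rhs(H, N):
    """Right-hand side of the double-bracket flow."""
    return bracket(H, bracket(H, N))


def shifted(H, K, a):
    """Return H + a * K."""
    return [[h + a * k for h, k in zip(rh, rk)] for rh, rk in zip(H, K)]


def rk4_step(H, N, dt):
    k1 = rhs(H, N)
    k2 = rhs(shifted(H, k1, dt / 2), N)
    k3 = rhs(shifted(H, k2, dt / 2), N)
    k4 = rhs(shifted(H, k3, dt), N)
    n = len(H)
    return [[H[i][j] + dt / 6 * (k1[i][j] + 2 * k2[i][j] + 2 * k3[i][j] + k4[i][j])
             for j in range(n)] for i in range(n)]


N = [[3.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 1.0]]
H = [[2.0, 1.0, 0.0], [1.0, 2.0, 1.0], [0.0, 1.0, 2.0]]  # eigenvalues 2, 2 +/- sqrt(2)

for _ in range(2000):  # integrate up to t = 20 with dt = 0.01
    H = rk4_step(H, N, 0.01)

diagonal = [H[i][i] for i in range(3)]
off_diagonal = max(abs(H[i][j]) for i in range(3) for j in range(3) if i != j)
print([round(d, 6) for d in diagonal], off_diagonal)
```

The diagonal of the limit lists the eigenvalues of the initial matrix in the same (decreasing) order as the diagonal of `N`, which is the sorting behaviour described above.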

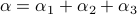

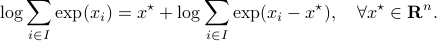

Theorem:

Consider the LP $(1)$ with $\langle c,v_i-v_j\rangle\neq 0$ for all $i,j\in [k]$, $i\neq j$, then, there exist diagonal matrices $Q$ and $N$ such that $(2)$ converges for almost any $\Theta\in\mathsf{SO}(k)$ to $H_{\infty}=\mathrm{diag}(d)$ with the optimizer of $(1)$ being $x^{\star}=Td$.

Proof:

Global convergence is prohibited by the topology of $\mathsf{SO}(k)$.

Let $N=\mathrm{diag}(\langle c,v_1\rangle,\dots,\langle c,v_k\rangle)$ and let $Q=\mathrm{diag}(1,0,\dots,0)$. Then, the isospectral flow will converge from almost everywhere to $H_{\infty}=\mathrm{diag}(d)$ (only $d_{j^{\star}}=1$ for $j^{\star}$ the index of the largest $\langle c,v_j\rangle$, all other entries being $0$), such that $x^{\star}=Td=v_{j^{\star}}$.

Please consider the references for more on the fascinating structure of $(2)$.

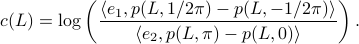

The Imaginary trick |12 March 2021|

tags: math.OC

Most of us will learn at some point in our life that $\sqrt{-1}$ is problematic, as which number multiplied by itself can ever be negative?

To overcome this seemingly useless deficiency one learns about complex numbers and specifically, the imaginary number $i$, which is defined to satisfy $i^2 = -1$.

At this point you should have asked yourself when on earth is this useful?

In this short post I hope to highlight - from a slightly different angle than most people grounded in physics would expect - that the complex numbers are remarkably useful.

Complex numbers, and especially the complex exponential, show up in a variety of contexts, from signal processing to statistics and quantum mechanics, most notably, of course, in the Fourier transform.

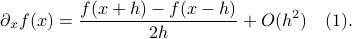

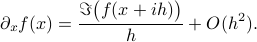

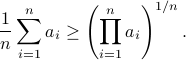

We will however look at something completely different. It can be argued that in the late 60s James Lyness and Cleve Moler brought to life a very elegant new approach to numerical differentiation. To introduce this idea, recall that even nowadays the most well-known approach in numerical differentiation is to use some sort of finite-difference method, for example, for a sufficiently smooth $f:\mathbb{R}\to\mathbb{R}$ and any $h>0$ one could use the central-difference method

$$f'(x) = \frac{f(x+h)-f(x-h)}{2h} + O(h^2).$$

Now one might be tempted to make $h$ extremely small, as then the error must vanish!

However, numerically, for a very small $h$ the two function evaluations $f(x+h)$ and $f(x-h)$ will be indistinguishable.

So although the error scales as $O(h^2)$ there is some practical lower bound on this error based on the machine precision of your computer.
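The lower bound is easy to observe numerically. The sketch below is an illustration only: the test function `math.exp`, the point `x = 1` and the list of step sizes are arbitrary choices made here, not taken from the post. It prints the central-difference error for shrinking steps; the error first decreases and then grows again once cancellation takes over.

```python
import math

def central_difference(f, x, h):
    """Central-difference estimate of f'(x) with step h."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

x = 1.0
exact = math.exp(x)  # the derivative of exp is exp itself

for h in (1e-1, 1e-3, 1e-5, 1e-8, 1e-12, 1e-15):
    error = abs(central_difference(math.exp, x, h) - exact)
    print(f"h = {h:.0e}   error = {error:.3e}")

# Two representative regimes: a moderate step and a far too small step.
error_moderate = abs(central_difference(math.exp, x, 1e-5) - exact)
error_tiny = abs(central_difference(math.exp, x, 1e-15) - exact)
```

In double precision the moderate step should be accurate to many digits, whereas the tiny step is dominated by round-off.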

One potential application of numerical derivatives is in the context of zeroth-order (derivative-free) optimization.

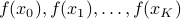

Say you want to adjust van der Poel's Canyon frame such that he goes even faster, you will not have access to explicit gradients, but you can evaluate the performance of a change in design, for example in a simulator. So what you usually can obtain is a set of function evaluations $f(x_1), f(x_2), \dots$. Given this data, a somewhat obvious approach is to mimic first-order algorithms

$$x_{k+1} = x_k - \eta \nabla f(x_k),$$

where $\eta > 0$ is some stepsize. For example, one could replace $\nabla f(x_k)$ in this update by the central-difference approximation.

Clearly, if the objective function $f$ is well-behaved and the approximation of $\nabla f(x_k)$ is reasonably good, then something must come out?

As was remarked before, if your approximation - for example due to numerical cancellation errors - will always have a bias it is not immediate how to construct a high-performance zeroth-order optimization algorithm. If only there were a way to have a numerical approximation without finite differencing!
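To make the idea of mimicking a first-order method concrete, here is a minimal sketch of gradient descent where every gradient is replaced by a coordinate-wise central-difference estimate. The objective, the stepsize, the difference step and the function names are hypothetical and chosen for illustration only; this is not the algorithm from the pre-print.

```python
def central_difference_gradient(f, x, h=1e-6):
    """Estimate the gradient of f at x, one coordinate at a time."""
    grad = []
    for j in range(len(x)):
        forward = list(x)
        backward = list(x)
        forward[j] += h
        backward[j] -= h
        grad.append((f(forward) - f(backward)) / (2.0 * h))
    return grad

def objective(x):
    """Hypothetical smooth objective with minimizer (1, -0.5)."""
    return (x[0] - 1.0) ** 2 + 2.0 * (x[1] + 0.5) ** 2

def zeroth_order_descent(f, x0, stepsize=0.1, iterations=200):
    """Gradient descent that only ever calls f, never its gradient."""
    x = list(x0)
    for _ in range(iterations):
        g = central_difference_gradient(f, x)
        x = [xj - stepsize * gj for xj, gj in zip(x, g)]
    return x

x_final = zeroth_order_descent(objective, [3.0, 2.0])
print(x_final)
```

For such a benign quadratic the estimate is essentially exact, so the iterates end up close to the minimizer; the interesting question is what happens when the step `h` has to be very small or the function evaluations are noisy.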

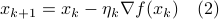

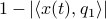

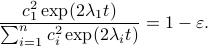

Let us assume that $f$ is sufficiently smooth, let $i$ be the imaginary number and consider for some $h>0$ the following series

$$f(x+ih) = f(x) + ihf'(x) - \frac{h^2}{2}f''(x) - i\frac{h^3}{6}f'''(x) + O(h^4).$$

From this expression it follows that

$$f'(x) = \frac{\Im\big(f(x+ih)\big)}{h} + O(h^2).$$

So we see that by passing to the complex domain and projecting the imaginary part back, we obtain a numerical method to construct approximations of derivatives without even the possibility of cancellation errors. This remarkable property makes it a very attractive candidate to be used in zeroth-order optimization algorithms, which is precisely what we investigated in our new pre-print. It turns out that convergence is not only robust, but also very fast!
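A small experiment shows the difference with finite differencing. The sketch below assumes a test function that can be evaluated at complex arguments; the function $e^{x}\sin(x)$, the point `x = 1` and the step sizes are arbitrary choices for this illustration.

```python
import cmath
import math

def f(z):
    """Test function built from operations that also accept complex input."""
    return cmath.exp(z) * cmath.sin(z)

def exact_derivative(x):
    """Derivative of exp(x) sin(x), known in closed form."""
    return math.exp(x) * (math.sin(x) + math.cos(x))

def complex_step(func, x, h):
    """Estimate func'(x) from the imaginary part of func(x + ih)."""
    return func(complex(x, h)).imag / h

def central_difference(func, x, h):
    """Central-difference estimate using real evaluations only."""
    return (func(x + h).real - func(x - h).real) / (2.0 * h)

x = 1.0
for h in (1e-4, 1e-8, 1e-15, 1e-20):
    err_cs = abs(complex_step(f, x, h) - exact_derivative(x))
    err_cd = abs(central_difference(f, x, h) - exact_derivative(x))
    print(f"h = {h:.0e}   complex-step = {err_cs:.3e}   central = {err_cd:.3e}")

error_complex_step = abs(complex_step(f, x, 1e-20) - exact_derivative(x))
error_central = abs(central_difference(f, x, 1e-15) - exact_derivative(x))
```

There is no subtraction of nearly equal numbers in `complex_step`, which is why the step can be made absurdly small without the estimate falling apart.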

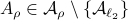

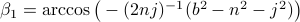

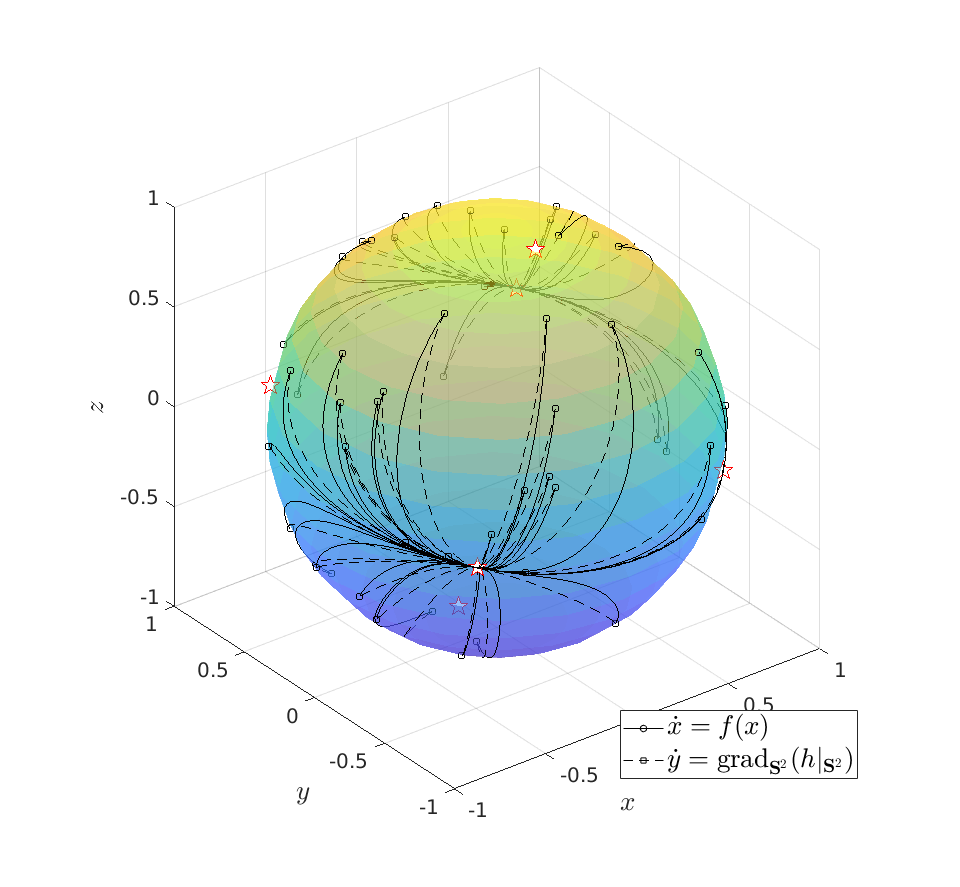

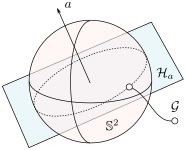

Topological Considerations in Stability Analysis |17 November 2020|

tags: math.DS

In this post we discuss very classical, yet, highly underappreciated topological results.

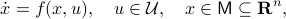

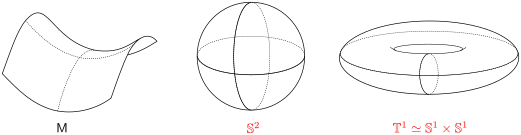

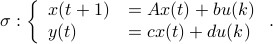

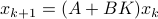

We will look at dynamical control systems of the form

$$\dot{x} = f(x,u), \quad x \in M,$$

where $M$ is some finite-dimensional embedded manifold.

As it turns out, topological properties of $M$ encode a great deal of fundamental control possibilities, most notably, whether a continuous globally asymptotically stabilizing control law exists or not (usually, one can only show the negative). In this post we will work towards two things. First, we show that a technically interesting nonlinear setting is that of a dynamical system evolving on a compact manifold: we will discuss that the desirable continuous feedback law can never exist there, yet the boundedness implied by compactness does allow for some computations. Secondly, if one is determined to work on systems for which a continuous globally asymptotically stabilizing feedback law exists, then a wide variety of existential sufficient conditions can be found, yet the setting is effectively Euclidean, which is the most basic nonlinear setting.

To start the discussion, we need one important topological notion.

Definition [Contractability].

Given a topological manifold  . If the map

. If the map  is null-homotopic, then,

is null-homotopic, then,  is contractible.

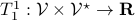

is contractible.

To say that a space is contractible when it has no holes (genus $0$) is wrong, take for example the sphere. The converse is true, any finite-dimensional space with a hole cannot be contractible. See Figure 1 below.

Figure 1: The manifold

Topological Obstructions to Global Asymptotic Stability

In this section we highlight a few (not all) of the most elegant topological results in stability theory. We focus on results without appealing to the given or partially known vector field $f$.

This line of work finds its roots in work by Bhatia, Szegö, Wilson, Sontag and many others.

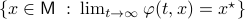

Theorem [The domain of attraction is contractible, Theorem 21]

Let the flow $\varphi$ be continuous and let $x^\star$ be an asymptotically stable equilibrium point. Then, the domain of attraction $\{x \in M : \lim_{t\to\infty}\varphi_t(x) = x^\star\}$ is contractible.

This theorem by Sontag states that the underlying topology of a dynamical system, better yet, the space on which the dynamical system evolves, dictates what is possible. Recall that linear manifolds are simply hyperplanes, which are contractible and hence, as we all know, global asymptotic stability is possible for linear systems. However, if $M$ is not contractible, there does not exist a globally asymptotically stable continuous-time system on $M$, take for example any sphere.
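The simplest sphere, the circle, already shows the phenomenon. The sketch below is an illustration under assumptions made here: the vector field $\dot\theta = -\sin(\theta)$, the forward Euler integrator and the initial conditions are all hypothetical choices. This field has an asymptotically stable equilibrium at $\theta = 0$, but continuity forces a second equilibrium at $\theta = \pi$, so the domain of attraction is the circle minus a point, which is contractible, as the theorem demands.

```python
import math

def flow_on_circle(theta0, steps=2000, dt=0.01):
    """Integrate d(theta)/dt = -sin(theta) with forward Euler steps."""
    theta = theta0
    for _ in range(steps):
        theta -= dt * math.sin(theta)
    return theta

# Initial conditions on either side of the antipodal point end up at 0.
from_left = flow_on_circle(3.0)
from_right = flow_on_circle(-3.0)

# The antipodal point itself is an equilibrium and never leaves.
from_antipode = flow_on_circle(math.pi)

print(from_left, from_right, from_antipode)
```

Whatever continuous vector field one tries on the circle, at least one initial condition will fail to converge to the desired equilibrium.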

The next example shows that one should not underestimate ‘‘linear systems’’ either.

Example [Dynamical Systems on  ]

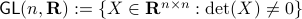

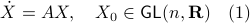

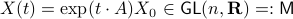

Recall that

]

Recall that  is a smooth

is a smooth  -dimensional manifold (Lie group).

Then, consider for some

-dimensional manifold (Lie group).

Then, consider for some  ,

,  the (right-invariant) system

the (right-invariant) system

Indeed, the solution to (1) is given by  . Since this group consists out of two disjoint components there does not exist a matrix

. Since this group consists out of two disjoint components there does not exist a matrix  , or continuous nonlinear map for that matter, which can make a vector field akin to (1) globally asymptotically stable. This should be contrasted with the closely related ODE

, or continuous nonlinear map for that matter, which can make a vector field akin to (1) globally asymptotically stable. This should be contrasted with the closely related ODE  ,

,  . Even for the path connected component

. Even for the path connected component  , The theorem by Sontag obstructs the existence of a continuous global asymptotically stable vector field. This because the group is not simply connected for

, The theorem by Sontag obstructs the existence of a continuous global asymptotically stable vector field. This because the group is not simply connected for  (This follows most easily from establishing the homotopy equivalence between

(This follows most easily from establishing the homotopy equivalence between  and

and  via (matrix) Polar Decomposition), hence the fundamental group is non-trivial and contractibility is out of the picture. See that if one would pick

via (matrix) Polar Decomposition), hence the fundamental group is non-trivial and contractibility is out of the picture. See that if one would pick  to be stable (Hurwitz), then for

to be stable (Hurwitz), then for  we have

we have  , however

, however  .

.
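One way to see both claims at once is to track the determinant along solutions. The short derivation below is an added illustration, written in assumed notation: the system is $\dot{X} = AX$ with initial condition $X_0$, and the only ingredient is Jacobi's formula for the derivative of the determinant.

```latex
\begin{align*}
\frac{d}{dt}\det X(t)
  &= \det X(t)\,\mathrm{tr}\!\left(X(t)^{-1}\dot{X}(t)\right)
   = \det X(t)\,\mathrm{tr}\!\left(X(t)^{-1}AX(t)\right)
   = \mathrm{tr}(A)\,\det X(t), \\
\det X(t) &= e^{t\,\mathrm{tr}(A)}\det X_0 .
\end{align*}
```

The sign of the determinant is therefore preserved for all times, so a solution can never move from one connected component to the other. Moreover, if $A$ is Hurwitz, then $\mathrm{tr}(A) < 0$ and the determinant tends to $0$, that is, solutions approach the singular matrices, which lie outside the group.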

Following up on Sontag, Bhat and Bernstein revolutionized the field by figuring out some very important ramifications (which are easy to apply). The key observation is the next lemma (that follows from intersection theory arguments, even for non-orientable manifolds).

Lemma [Proposition 1] Compact, boundaryless, manifolds are never contractible.

Clearly, we have to ignore $0$-dimensional manifolds here. Important examples of this lemma are the $n$-sphere $\mathbb{S}^n$ and the rotation group $\mathrm{SO}(3)$ as they make a frequent appearance in mechanical control systems, like a robotic arm.

Note that the boundaryless assumption is especially critical here.

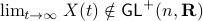

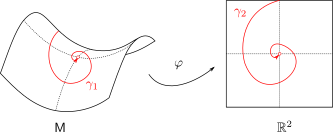

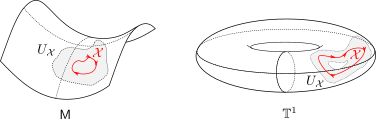

Now the main generalization by Bhat and Bernstein with respect to the work of Sontag is to use a (vector) bundle construction of $M$.

Loosely speaking, given a base $Q$ and total manifold $M$, $\pi : M \to Q$ is a vector bundle when for the surjective map $\pi$ and each $q \in Q$ we have that the fiber $\pi^{-1}(q)$ is a finite-dimensional vector space (see the great book by Abraham, Marsden and Ratiu Section 3.4 and/or the figure below).

Figure 2: A prototypical vector bundle, in this case the cylinder $\mathbb{S}^1 \times \mathbb{R}$.

Intuitively, vector bundles can be thought of as manifolds with vector spaces attached at each point, think of a cylinder living in $\mathbb{R}^3$, where the base manifold is $\mathbb{S}^1$ and each fiber is a line through $\mathbb{S}^1$ extending in the third direction, exactly as the figure shows. Note, however, this triviality only needs to hold locally.

A concrete example is a rigid body with state space $\mathrm{SO}(3) \times \mathbb{R}^3$, for which a large number of papers claim to have designed continuous globally asymptotically stabilizing controllers. The fact that this was so enormously overlooked makes this topological result not only elegant from a mathematical point of view, but it also shows its practical value.

The motivation of this post is precisely to convey this message: topological insights can be very fruitful.

Now we can state their main result

Theorem [Theorem 1]

Let $Q$ be a compact, boundaryless, manifold, being the base of the vector bundle $\pi : M \to Q$ with $\dim(Q) \geq 1$, then, there is no continuous vector field on $M$ with a globally asymptotically stable equilibrium point.

Indeed, compactness of the base $Q$ can also be relaxed to $Q$ not being contractible as we saw in the example above, however, compactness is in most settings more tangible to work with.

Example [The Grassmannian manifold]

The Grassmannian manifold, denoted by $\mathrm{Gr}(k,n)$, is the set of all $k$-dimensional subspaces $S \subseteq \mathbb{R}^n$. One can identify $\mathrm{Gr}(k,n)$ with the Stiefel manifold $\mathrm{St}(k,n)$, the manifold of all orthogonal $k$-frames, in the bundle sense of before, that is $\pi : \mathrm{St}(k,n) \to \mathrm{Gr}(k,n)$ such that the fiber $\pi^{-1}(S)$ represents all $k$-frames generating the subspace $S$. In its turn, $\mathrm{St}(k,n)$ can be identified with the compact Lie group $\mathrm{O}(n)$ (via a quotient), such that indeed $\mathrm{Gr}(k,n)$ is compact.

This manifold shows up in several optimization problems and from our continuous-time point of view we see that one can never find, for example, a globally converging gradient-flow-like algorithm.

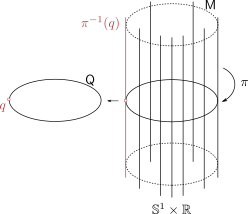

Example [Tangent Bundles]

By means of a Lagrangian viewpoint, a lot of mechanical systems are of a second-order nature, this means they are defined on the tangent bundle of some $Q$, that is, $\pi : TQ \to Q$. However, then, if the configuration manifold $Q$ is compact, we can again appeal to the Theorem by Bhat and Bernstein. For example, Figure 2 can relate to the manifold over which the pair